Perception and Navigation for Heavy-Duty Machines

Towards robust perception and navigation solutions for heavy-duty machines operating in all-weather conditions

Heavy-duty machines operate in highly complex, unstructured, and cluttered environments such as forests, construction sites, and mines. These environments pose several challenges, including low visibility during adverse weather at outdoor sites and low illumination in places such as mines. Such working conditions place a cognitive burden on operators and make operation accident-prone, both for the operators and for people working near the machines. This drives a growing demand for semi-autonomous systems that reduce the burden on operators, or for fully autonomous systems. These solutions can include object detection, mapping, and navigation in challenging conditions, all of which require perception sensors such as cameras, lidars, and radars. In this newsletter, we take a closer look at the use of perception for detection and navigation in heavy-duty mobile machines (HDMMs). The first article describes how lidar sensors can be used effectively in a forest environment, while the second explains how radar sensors can be effective for navigation even in adverse conditions.

Articles

- LiDAR-based perception in a forest environment: Robust tree detection and navigation in an unstructured forest environment
- Radar Perception for Heavy-Duty Mobile Machines: Ego-motion estimation using high-resolution radar data

LiDAR-based perception in a forest environment

Robust tree detection and navigation in an unstructured forest environment

Forestry machines operate in highly cluttered and unstructured forest environments. This increases the burden on operators, contributes to labor shortages, and creates a need to automate perception and navigation tasks. However, developing reliable navigation and perception algorithms for autonomous forestry machines is particularly challenging because of these complex environmental conditions. For autonomous navigation, lidar or radar sensors are typically combined with Global Navigation Satellite Systems (GNSS) and Simultaneous Localization and Mapping (SLAM) algorithms, which estimate a robot’s trajectory and a map of the environment from various measurements. However, GNSS suffers from signal blockage and reflection in a forest environment. Therefore, relying on range sensors like lidars, sonars, and radars for mapping and localization becomes crucial.

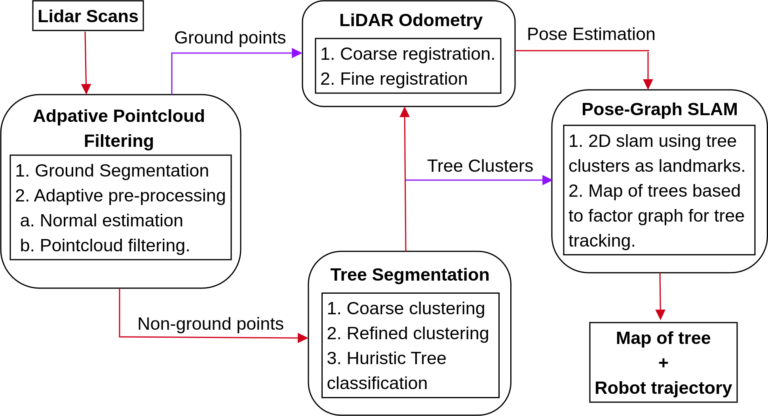

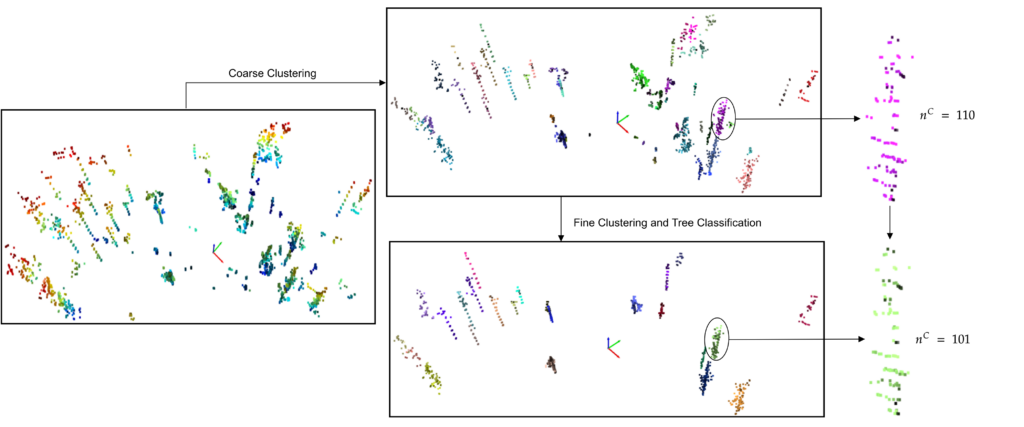

A crucial task for forestry applications is detecting and cutting trees, which navigation systems must also treat as obstacles to avoid. In our work [1], we proposed a unified approach that detects trees and uses them for SLAM, as shown in Fig 1. AI-based object detection is common for camera sensors and generalizes well to images from different cameras. However, comparably general object detection approaches for lidar sensors are not yet available. Hence, we developed an analytical system for robust tree segmentation that works with different lidar sensors and is robust against weather noise. The method includes an adaptive approach for normal estimation and point cloud filtration, addressing the challenges posed by sparse point clouds. We also introduced a heuristic technique for extracting trees from the filtered data. Fig 2 shows the steps involved in tree segmentation. Segmented trees can also be used for tree diameter estimation and tree logging.
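To make the idea of normal-based filtering concrete, the sketch below estimates a normal for each point from the PCA of its nearest neighbours and keeps points whose normal is roughly horizontal, which is what one would expect on a vertical trunk surface. It is only an illustration and not the adaptive method of [1]: the fixed neighbourhood size `k`, the threshold `max_abs_nz`, and both function names are assumptions made for this example, and the sensor frame is assumed to have its z-axis pointing up.

```python
import numpy as np


def estimate_normals(points, k=8):
    """Estimate one unit normal per point from the PCA of its k nearest neighbours.

    Uses brute-force pairwise distances, which is fine for a small example
    but too slow for full lidar scans (a k-d tree would be used in practice).
    """
    points = np.asarray(points, dtype=float)
    diff = points[:, None, :] - points[None, :, :]
    dist2 = np.einsum("ijk,ijk->ij", diff, diff)
    # The k smallest distances per row; the point itself is included.
    neighbours = np.argsort(dist2, axis=1)[:, :k]

    normals = np.empty_like(points)
    for i, idx in enumerate(neighbours):
        cov = np.cov(points[idx].T)          # 3x3 covariance of the patch
        _, eigvecs = np.linalg.eigh(cov)     # eigenvalues in ascending order
        normals[i] = eigvecs[:, 0]           # direction of least variance
    return normals


def vertical_surface_mask(normals, max_abs_nz=0.3):
    """Flag points whose normal is close to horizontal (hypothetical threshold)."""
    return np.abs(normals[:, 2]) < max_abs_nz
```

Points on flat ground have normals close to the z-axis and are rejected, while points on a vertical cylinder are kept. A fixed `k` tends to break down on sparse scans, which is the situation the adaptive approach described above is meant to handle.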

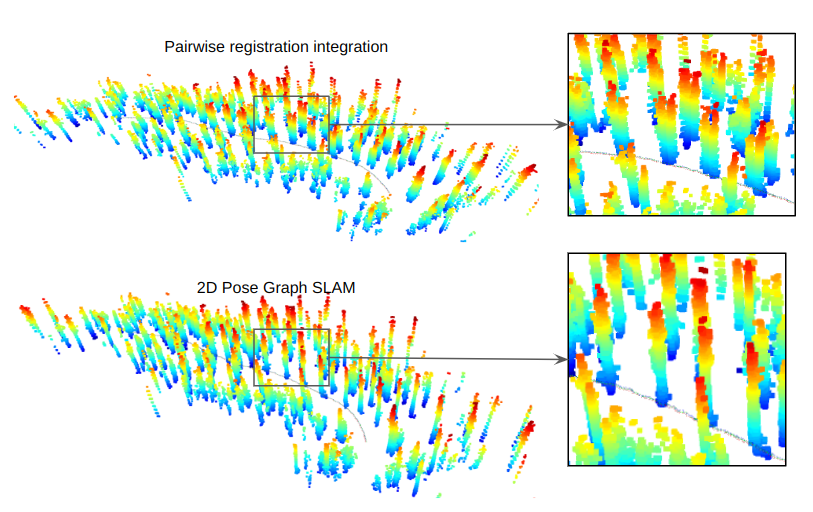

Besides detecting obstacles, the lidar sensor is also essential for SLAM tasks. A significant aspect of perception-based SLAM is scan registration, which estimates robot motion between time instances from consecutive sensor scans. However, conventional SLAM algorithms based on generalized features struggle in forestry conditions because point clouds are less accurate and lack relevant features. Our work [1] proposed an enhanced SLAM method tailored for forest environments, which leverages the trees detected in the previous step to make scan registration more robust. The proposed technique employs shape-to-shape registration for initial pose estimation and refines it using point-to-plane ICP [2]. The outputs of scan registration and tree detection contribute to localization and mapping via a landmark-based graph SLAM algorithm implemented using the GTSAM library [3]. Fig 3 shows the forest maps obtained with pairwise scan registration and with tree-based scan registration. This article shows how lidar sensors can be effectively used in complex environments like forestry for operator assistance or autonomous navigation of forest machinery.
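As an illustration of the point-to-plane refinement, the following sketch solves one linearized least-squares step in the spirit of [2]. It assumes that point correspondences are already known and that the remaining rotation is small, so that the rotation can be approximated as R ≈ I + [ω]×. The function name and argument layout are assumptions for this example; it does not cover the shape-to-shape initialization or the rest of the pipeline in [1].

```python
import numpy as np


def point_to_plane_step(src, dst, dst_normals):
    """One linearized point-to-plane alignment step (small-angle assumption).

    Minimizes sum_i (((I + [w]x) s_i + t - d_i) . n_i)^2 over the rotation
    vector w and translation t, given matched points s_i -> d_i and the
    unit normals n_i at the destination points.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = np.asarray(dst_normals, dtype=float)

    # Each row of A is [s_i x n_i, n_i]; the unknown vector is [w, t].
    A = np.hstack([np.cross(src, n), n])
    # Right-hand side: signed point-to-plane distance before alignment.
    b = np.einsum("ij,ij->i", dst - src, n)

    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x[:3], x[3:]  # rotation vector (rad), translation
```

In a full ICP loop this step would be repeated, re-estimating correspondences after each update. A good initial pose matters because the small-angle approximation only holds near the solution.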

About the author

Himanshu Gupta

Early Stage Researcher 5

Himanshu is a third-year PhD researcher at Örebro University, Sweden, working on robust perception in adverse weather conditions. He has recently started his internship at GIM Robotics, Finland, with research focused on robust object detection and navigation in all-weather conditions using cameras and lidars.

Radar Perception for Heavy-Duty Mobile Machines

Ego-motion estimation using high-resolution radar data

Environmental understanding is pivotal for the successful operation of autonomous machines, especially as they navigate diverse and often unpredictable operational terrains. Heavy-Duty Mobile Machines (HDMMs), particularly those used in underground mines or on construction sites, face uniquely demanding challenges. In locations where Global Navigation Satellite Systems (GNSS) are ineffective or denied, the onus falls on onboard sensors to perform tasks such as localization, mapping, and Simultaneous Localization and Mapping (SLAM). Sensors like lidars and cameras are commonly employed for these purposes. They offer high-resolution data under optimal conditions, but poor visibility and inclement weather severely hamper their efficacy. Radar sensor performance, in contrast, is nearly unaffected by those conditions. Radars have recently been explored for tasks like ego-motion estimation and SLAM [4].

We have focused on system-on-chip (SoC) radar sensors, as shown in Fig 4, which have three-dimensional sensing capabilities; their angular and range resolution is better than that of the previous generation of single-chip radar sensors. These radar sensors also measure Doppler velocity, which can help detect and avoid dynamic objects and obstacles.
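The role of Doppler velocity can be sketched with a small least-squares example. For a static target seen along the unit direction u, a sensor moving with velocity v measures a radial velocity of about -u · v, so the static targets in one scan constrain v, and targets that do not fit the estimate are candidates for moving objects. The sketch below assumes this sign convention, a sensor-frame azimuth/elevation parameterization, and a crude median-based re-fit for rejecting outliers; the function names and thresholds are hypothetical and this is not the outlier handling of any cited method.

```python
import numpy as np


def unit_directions(azimuth, elevation):
    """Unit line-of-sight vectors in the sensor frame (angles in radians)."""
    az = np.asarray(azimuth, dtype=float)
    el = np.asarray(elevation, dtype=float)
    return np.column_stack(
        [np.cos(el) * np.cos(az), np.cos(el) * np.sin(az), np.sin(el)]
    )


def estimate_ego_velocity(azimuth, elevation, doppler, min_thresh=0.2, iters=3):
    """Fit the sensor velocity to Doppler readings and flag poorly fitting targets.

    Assumed convention: a static target yields doppler = -u . v.
    Returns the estimated velocity and a boolean mask of likely dynamic targets.
    """
    u = unit_directions(azimuth, elevation)
    doppler = np.asarray(doppler, dtype=float)
    inliers = np.ones(doppler.shape[0], dtype=bool)
    for _ in range(iters):
        # Least-squares fit using only the targets currently believed static.
        v, *_ = np.linalg.lstsq(u[inliers], -doppler[inliers], rcond=None)
        residual = np.abs(u @ v + doppler)
        # Crude robust threshold (an assumption): never below min_thresh (m/s).
        inliers = residual < max(min_thresh, 2.0 * np.median(residual))
    return v, ~inliers
```

The fit is only well conditioned when the targets cover a reasonable spread of azimuth and elevation; with a narrow field of view, the velocity components perpendicular to the boresight are weakly constrained.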

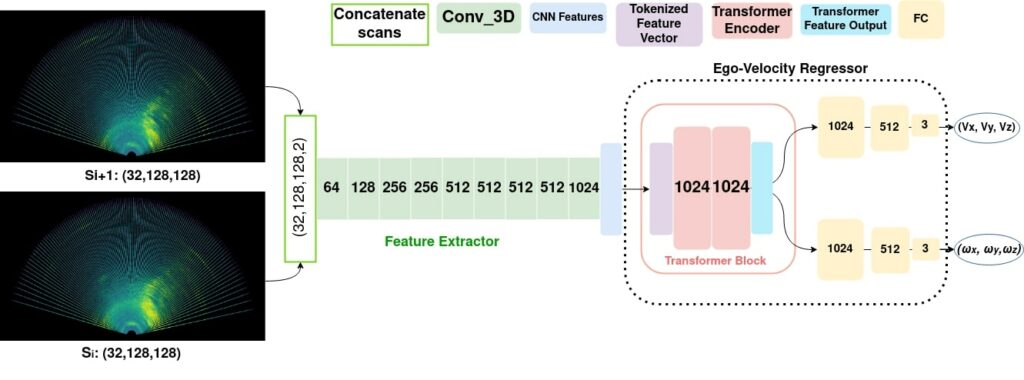

We are exploring high-resolution automotive radars for solving the above tasks. A difficulty with radar data is noise in the form of clutter and multi-path reflections, which makes it hard to use in such algorithms. Existing ego-motion estimation methods for Doppler radars rely on processed data points, and a traditional radar signal processing pipeline discards a lot of valuable data. Motivated by this, we proposed an end-to-end learning-based ego-motion estimation method in our paper [5], which learns the linear and angular motion of the sensor platform from the radar scan. We use the three-dimensional radar scan discretized into range, azimuth, and elevation bins, where each bin holds the intensity of reflection. We use a deep neural network architecture with two parts (Fig 5): a feature extractor that extracts the significant features and an ego-velocity regressor that predicts the ego-motion from a pair of radar scans. The feature extractor uses a 3D CNN and the ego-velocity regressor uses a transformer encoder. The network has been trained with lidar-inertial ground-truth ego-motion and evaluated in different scenarios.
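The input representation described above can be pictured as a dense 3D array indexed by range, azimuth, and elevation bin. The sketch below builds such an array from a list of detections by keeping the strongest intensity per bin. It is only meant to illustrate the data layout: the bin counts, field of view, maximum range, and function name are assumptions for this example, and a real sensor may deliver the discretized scan directly rather than as a point list.

```python
import numpy as np


def radar_scan_to_grid(range_m, azimuth, elevation, intensity,
                       n_bins=(64, 32, 8), max_range=50.0,
                       az_fov=np.pi / 3, el_fov=np.pi / 12):
    """Discretize radar detections into a (range, azimuth, elevation) grid.

    Angles are in radians and assumed to lie within +/- az_fov and +/- el_fov.
    Each cell stores the maximum intensity of the detections that fall into it.
    """
    range_m = np.asarray(range_m, dtype=float)
    azimuth = np.asarray(azimuth, dtype=float)
    elevation = np.asarray(elevation, dtype=float)
    intensity = np.asarray(intensity, dtype=float)

    # Map each coordinate to an integer bin index.
    r_idx = np.floor(range_m / max_range * n_bins[0]).astype(int)
    a_idx = np.floor((azimuth + az_fov) / (2 * az_fov) * n_bins[1]).astype(int)
    e_idx = np.floor((elevation + el_fov) / (2 * el_fov) * n_bins[2]).astype(int)

    # Drop detections outside the grid.
    valid = (
        (r_idx >= 0) & (r_idx < n_bins[0])
        & (a_idx >= 0) & (a_idx < n_bins[1])
        & (e_idx >= 0) & (e_idx < n_bins[2])
    )

    grid = np.zeros(n_bins, dtype=float)
    # Unbuffered maximum so repeated indices are all taken into account.
    np.maximum.at(grid, (r_idx[valid], a_idx[valid], e_idx[valid]), intensity[valid])
    return grid
```

A pair of such grids from consecutive scans could then be stacked along a channel axis as input to a 3D convolutional feature extractor.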

We are also working on high-resolution signal processing techniques to denoise the radar data for tasks like occupancy mapping and localization in a given radar map. We further aim to identify the shortcomings and limitations of radar sensors and address them using other sensors like IMUs. A multi-modal sensor setup will lead to more robust and reliable solutions for higher levels of autonomy in navigation and operation.

References

Header image courtesy of Bosch Rexroth AG

[1] H. Gupta, H. Andreasson, A. J. Lilienthal, and P. Kurtser, “Robust Scan Registration for Navigation in Forest Environment Using Low-Resolution LiDAR Sensors,” Sensors, vol. 23, no. 10, p. 4736, May 2023, doi: 10.3390/s23104736.

[2] K.-L. Low, “Linear least-squares optimization for point-to-plane ICP surface registration,” Chapel Hill, University of North Carolina, vol. 4, no. 10, pp. 1-3, 2004.

[3] F. Dellaert and GTSAM Contributors, borglab/gtsam, 2022. https://doi.org/10.5281/zenodo.5794541.

[4] Kellner et al., “Instantaneous Ego-Motion Estimation using Doppler Radar,” IEEE ITSC, 2013.

[5] Rai et al., “4DEgo: ego-velocity estimation from high-resolution radar data,” Frontiers in Signal Processing, 2023.

About the author

Prashant Kumar Rai

Early Stage Researcher 7

Prashant is a third-year PhD student at Tampere University, Finland. He is working on localization, ego-motion estimation, and mapping in GNSS-denied conditions using high-resolution imaging radars.